reationists occasionally charge that

evolution is useless as a scientific theory because it

produces no practical benefits and has no relevance to

daily life. However, the evidence of biology alone shows

that this claim is untrue. There are numerous natural

phenomena for which evolution gives us a sound theoretical

underpinning. To name just one, the observed development of

resistance - to insecticides in crop pests, to antibiotics

in bacteria, to chemotherapy in cancer cells, and to

anti-retroviral drugs in viruses such as HIV - is a

straightforward consequence of the laws of mutation and

selection, and understanding these principles has helped us

to craft strategies for dealing with these harmful

organisms. The evolutionary postulate of common descent has

aided the development of new medical drugs and techniques

by giving researchers a good idea of which organisms they

should experiment on to obtain results that are most likely

to be relevant to humans. Finally, the principle of

selective breeding has been used to great effect by humans

to create customized organisms unlike anything found in

nature for their own benefit. The canonical example, of

course, is the many varieties of domesticated dogs (breeds

as diverse as bulldogs, chihuahuas and dachshunds have been

produced from wolves in only a few thousand years), but

less well-known examples include cultivated maize (very

different from its wild relatives, none of which have the

familiar "ears" of human-grown corn), goldfish (like dogs,

we have bred varieties that look dramatically different

from the wild type), and dairy cows (with immense udders

far larger than would be required just for nourishing

offspring).

reationists occasionally charge that

evolution is useless as a scientific theory because it

produces no practical benefits and has no relevance to

daily life. However, the evidence of biology alone shows

that this claim is untrue. There are numerous natural

phenomena for which evolution gives us a sound theoretical

underpinning. To name just one, the observed development of

resistance - to insecticides in crop pests, to antibiotics

in bacteria, to chemotherapy in cancer cells, and to

anti-retroviral drugs in viruses such as HIV - is a

straightforward consequence of the laws of mutation and

selection, and understanding these principles has helped us

to craft strategies for dealing with these harmful

organisms. The evolutionary postulate of common descent has

aided the development of new medical drugs and techniques

by giving researchers a good idea of which organisms they

should experiment on to obtain results that are most likely

to be relevant to humans. Finally, the principle of

selective breeding has been used to great effect by humans

to create customized organisms unlike anything found in

nature for their own benefit. The canonical example, of

course, is the many varieties of domesticated dogs (breeds

as diverse as bulldogs, chihuahuas and dachshunds have been

produced from wolves in only a few thousand years), but

less well-known examples include cultivated maize (very

different from its wild relatives, none of which have the

familiar "ears" of human-grown corn), goldfish (like dogs,

we have bred varieties that look dramatically different

from the wild type), and dairy cows (with immense udders

far larger than would be required just for nourishing

offspring).

Critics might charge that creationists can explain these

things without recourse to evolution. For example,

creationists often explain the development of resistance to

antibiotic agents in bacteria, or the changes wrought in

domesticated animals by artificial selection, by presuming

that God decided to create organisms in fixed groups,

called "kinds" or baramin. Though natural

microevolution or human-guided artificial selection can

bring about different varieties within the originally

created "dog-kind," or "cow-kind," or "bacteria-kind" (!),

no amount of time or genetic change can transform one

"kind" into another. However, exactly how the creationists

determine what a "kind" is, or what mechanism prevents

living things from evolving beyond its boundaries, is

invariably never explained.

But in the last few decades, the continuing advance of

modern technology has brought about something new.

Evolution is now producing practical benefits in a very

different field, and this time, the creationists cannot

claim that their explanation fits the facts just as well.

This field is computer science, and the benefits come from

a programming strategy called genetic algorithms.

This essay will explain what genetic algorithms are and

will show how they are relevant to the

evolution/creationism debate.

What is a genetic algorithm?

|

|

Concisely stated, a genetic algorithm (or GA for short)

is a programming technique that mimics biological evolution

as a problem-solving strategy. Given a specific problem to

solve, the input to the GA is a set of potential solutions

to that problem, encoded in some fashion, and a metric

called a fitness function that allows each candidate

to be quantitatively evaluated. These candidates may be

solutions already known to work, with the aim of the GA

being to improve them, but more often they are generated at

random.

The GA then evaluates each candidate according to the

fitness function. In a pool of randomly generated

candidates, of course, most will not work at all, and these

will be deleted. However, purely by chance, a few may hold

promise - they may show activity, even if only weak and

imperfect activity, toward solving the problem.

These promising candidates are kept and allowed to

reproduce. Multiple copies are made of them, but the copies

are not perfect; random changes are introduced during the

copying process. These digital offspring then go on to the

next generation, forming a new pool of candidate solutions,

and are subjected to a second round of fitness evaluation.

Those candidate solutions which were worsened, or made no

better, by the changes to their code are again deleted; but

again, purely by chance, the random variations introduced

into the population may have improved some individuals,

making them into better, more complete or more efficient

solutions to the problem at hand. Again these winning

individuals are selected and copied over into the next

generation with random changes, and the process repeats.

The expectation is that the average fitness of the

population will increase each round, and so by repeating

this process for hundreds or thousands of rounds, very good

solutions to the problem can be discovered.

As astonishing and counterintuitive as it may seem to

some, genetic algorithms have proven to be an enormously

powerful and successful problem-solving strategy,

dramatically demonstrating the power of evolutionary

principles. Genetic algorithms have been used in a wide

variety of fields to evolve solutions to problems as

difficult as or more difficult than those faced by human

designers. Moreover, the solutions they come up with are

often more efficient, more elegant, or more complex than

anything comparable a human engineer would produce. In some

cases, genetic algorithms have come up with solutions that

baffle the programmers who wrote the algorithms in the

first place!

Methods of representation

Before a genetic algorithm can be put to work on any

problem, a method is needed to encode potential solutions

to that problem in a form that a computer can process. One

common approach is to encode solutions as binary strings:

sequences of 1's and 0's, where the digit at each position

represents the value of some aspect of the solution.

Another, similar approach is to encode solutions as arrays

of integers or decimal numbers, with each position again

representing some particular aspect of the solution. This

approach allows for greater precision and complexity than

the comparatively restricted method of using binary numbers

only and often "is intuitively closer to the problem space"

(Fleming and Purshouse 2002, p.

1228).

This technique was used, for example, in the work of

Steffen Schulze-Kremer, who wrote a genetic algorithm to

predict the three-dimensional structure of a protein based

on the sequence of amino acids that go into it (Mitchell 1996, p. 62).

Schulze-Kremer's GA used real-valued numbers to represent

the so-called "torsion angles" between the peptide bonds

that connect amino acids. (A protein is made up of a

sequence of basic building blocks called amino acids, which

are joined together like the links in a chain. Once all the

amino acids are linked, the protein folds up into a complex

three-dimensional shape based on which amino acids attract

each other and which ones repel each other. The shape of a

protein determines its function.) Genetic algorithms for

training neural networks

often use this method of encoding also.

A third approach is to represent individuals in a GA as

strings of letters, where each letter again stands for a

specific aspect of the solution. One example of this

technique is Hiroaki Kitano's "grammatical encoding"

approach, where a GA was put to the task of evolving a

simple set of rules called a context-free grammar that was

in turn used to generate neural networks for a variety of

problems (Mitchell 1996, p.

74).

The virtue of all three of these methods is that they

make it easy to define operators that cause the random

changes in the selected candidates: flip a 0 to a 1 or vice

versa, add or subtract from the value of a number by a

randomly chosen amount, or change one letter to another.

(See the section on Methods of

change for more detail about the genetic operators.)

Another strategy, developed principally by John Koza of

Stanford University and called genetic programming,

represents programs as branching data structures called

trees (Koza et al. 2003, p. 35). In

this approach, random changes can be brought about by

changing the operator or altering the value at a given node

in the tree, or replacing one subtree with another.

Figure 1: Three simple program

trees of the kind normally used in genetic programming. The

mathematical expression that each one represents is given

underneath.

It is important to note that evolutionary algorithms do

not need to represent candidate solutions as data strings

of fixed length. Some do represent them in this way, but

others do not; for example, Kitano's grammatical encoding

discussed above can be efficiently scaled to create large

and complex neural networks, and Koza's genetic programming

trees can grow arbitrarily large as necessary to solve

whatever problem they are applied to.

Methods of selection

There are many different techniques which a genetic

algorithm can use to select the individuals to be copied

over into the next generation, but listed below are some of

the most common methods. Some of these methods are mutually

exclusive, but others can be and often are used in

combination.

Elitist selection: The most fit members of each

generation are guaranteed to be selected. (Most GAs do not

use pure elitism, but instead use a modified form where the

single best, or a few of the best, individuals from each

generation are copied into the next generation just in case

nothing better turns up.)

Fitness-proportionate selection: More fit

individuals are more likely, but not certain, to be

selected.

Roulette-wheel selection: A form of

fitness-proportionate selection in which the chance of an

individual's being selected is proportional to the amount

by which its fitness is greater or less than its

competitors' fitness. (Conceptually, this can be

represented as a game of roulette - each individual gets a

slice of the wheel, but more fit ones get larger slices

than less fit ones. The wheel is then spun, and whichever

individual "owns" the section on which it lands each time

is chosen.)

Scaling selection: As the average fitness of the

population increases, the strength of the selective

pressure also increases and the fitness function becomes

more discriminating. This method can be helpful in making

the best selection later on when all individuals have

relatively high fitness and only small differences in

fitness distinguish one from another.

Tournament selection: Subgroups of individuals

are chosen from the larger population, and members of each

subgroup compete against each other. Only one individual

from each subgroup is chosen to reproduce.

Rank selection: Each individual in the population

is assigned a numerical rank based on fitness, and

selection is based on this ranking rather than absolute

differences in fitness. The advantage of this method is

that it can prevent very fit individuals from gaining

dominance early at the expense of less fit ones, which

would reduce the population's genetic diversity and might

hinder attempts to find an acceptable solution.

Generational selection: The offspring of the

individuals selected from each generation become the entire

next generation. No individuals are retained between

generations.

Steady-state selection: The offspring of the

individuals selected from each generation go back into the

pre-existing gene pool, replacing some of the less fit

members of the previous generation. Some individuals are

retained between generations.

Hierarchical selection: Individuals go through

multiple rounds of selection each generation. Lower-level

evaluations are faster and less discriminating, while those

that survive to higher levels are evaluated more

rigorously. The advantage of this method is that it reduces

overall computation time by using faster, less selective

evaluation to weed out the majority of individuals that

show little or no promise, and only subjecting those who

survive this initial test to more rigorous and more

computationally expensive fitness evaluation.

Methods of change

Once selection has chosen fit individuals, they must be

randomly altered in hopes of improving their fitness for

the next generation. There are two basic strategies to

accomplish this. The first and simplest is called

mutation. Just as mutation in living things changes

one gene to another, so mutation in a genetic algorithm

causes small alterations at single points in an

individual's code.

The second method is called crossover, and

entails choosing two individuals to swap segments of their

code, producing artificial "offspring" that are

combinations of their parents. This process is intended to

simulate the analogous process of recombination that occurs

to chromosomes during sexual reproduction. Common forms of

crossover include single-point crossover, in which a

point of exchange is set at a random location in the two

individuals' genomes, and one individual contributes all

its code from before that point and the other contributes

all its code from after that point to produce an offspring,

and uniform crossover, in which the value at any

given location in the offspring's genome is either the

value of one parent's genome at that location or the value

of the other parent's genome at that location, chosen with

50/50 probability.

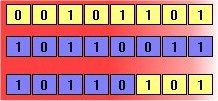

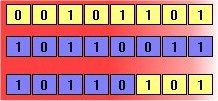

Figure 2: Crossover and mutation.

The above diagrams illustrate the effect of each of these

genetic operators on individuals in a population of 8-bit

strings. The upper diagram shows two individuals undergoing

single-point crossover; the point of exchange is set

between the fifth and sixth positions in the genome,

producing a new individual that is a hybrid of its

progenitors. The second diagram shows an individual

undergoing mutation at position 4, changing the 0 at that

position in its genome to a 1.

Other problem-solving techniques

With the rise of artificial life computing and the

development of heuristic methods, other computerized

problem-solving techniques have emerged that are in some

ways similar to genetic algorithms. This section explains

some of these techniques, in what ways they resemble GAs

and in what ways they differ.

- Neural networks

A neural network, or neural net for short, is a

problem-solving method based on a computer model of how

neurons are connected in the brain. A neural network

consists of layers of processing units called nodes joined

by directional links: one input layer, one output layer,

and zero or more hidden layers in between. An initial

pattern of input is presented to the input layer of the

neural network, and nodes that are stimulated then transmit

a signal to the nodes of the next layer to which they are

connected. If the sum of all the inputs entering one of

these virtual neurons is higher than that neuron's

so-called activation threshold, that neuron itself

activates, and passes on its own signal to neurons in the

next layer. The pattern of activation therefore spreads

forward until it reaches the output layer and is there

returned as a solution to the presented input. Just as in

the nervous system of biological organisms, neural networks

learn and fine-tune their performance over time via

repeated rounds of adjusting their thresholds until the

actual output matches the desired output for any given

input. This process can be supervised by a human

experimenter or may run automatically using a learning

algorithm (Mitchell 1996, p.

52). Genetic algorithms have been used both to build and to

train neural networks.

Figure 3: A simple feedforward neural network, with

one input layer consisting of four neurons, one hidden

layer consisting of three neurons, and one output layer

consisting of four neurons. The number on each neuron

represents its activation threshold: it will only fire if

it receives at least that many inputs. The diagram shows

the neural network being presented with an input string and

shows how activation spreads forward through the network to

produce an output.

- Hill-climbing

Similar to genetic algorithms, though more systematic and

less random, a hill-climbing algorithm begins with one

initial solution to the problem at hand, usually chosen at

random. The string is then mutated, and if the mutation

results in higher fitness for the new solution than for the

previous one, the new solution is kept; otherwise, the

current solution is retained. The algorithm is then

repeated until no mutation can be found that causes an

increase in the current solution's fitness, and this

solution is returned as the result (Koza et al. 2003, p. 59). (To

understand where the name of this technique comes from,

imagine that the space of all possible solutions to a given

problem is represented as a three-dimensional contour

landscape. A given set of coordinates on that landscape

represents one particular solution. Those solutions that

are better are higher in altitude, forming hills and peaks;

those that are worse are lower in altitude, forming

valleys. A "hill-climber" is then an algorithm that starts

out at a given point on the landscape and moves inexorably

uphill.) Hill-climbing is what is known as a greedy

algorithm, meaning it always makes the best choice

available at each step in the hope that the overall best

result can be achieved this way. By contrast, methods such

as genetic algorithms and simulated annealing, discussed

below, are not greedy; these methods sometimes make

suboptimal choices in the hopes that they will lead

to better solutions later on.

- Simulated annealing

Another optimization technique similar to evolutionary

algorithms is known as simulated annealing. The idea

borrows its name from the industrial process of

annealing in which a material is heated to above a

critical point to soften it, then gradually cooled in order to erase

defects in its crystalline structure, producing a more

stable and regular lattice arrangement of atoms (Haupt and Haupt 1998, p. 16). In

simulated annealing, as in genetic algorithms, there is a

fitness function that defines a fitness landscape; however,

rather than a population of candidates as in GAs, there is

only one candidate solution. Simulated annealing also adds

the concept of "temperature", a global numerical quantity

which gradually decreases over time. At each step of the

algorithm, the solution mutates (which is equivalent to

moving to an adjacent point of the fitness landscape). The

fitness of the new solution is then compared to the fitness

of the previous solution; if it is higher, the new solution

is kept. Otherwise, the algorithm makes a decision whether

to keep or discard it based on temperature. If the

temperature is high, as it is initially, even changes that

cause significant decreases in fitness may be kept and used

as the basis for the next round of the algorithm, but as

temperature decreases, the algorithm becomes more and more

inclined to only accept fitness-increasing changes.

Finally, the temperature reaches zero and the system

"freezes"; whatever configuration it is in at that point

becomes the solution. Simulated annealing is often used for

engineering design applications such as determining the

physical layout of components on a computer chip (Kirkpatrick, Gelatt and Vecchi

1983).

The earliest instances of what might today be called

genetic algorithms appeared in the late 1950s and early

1960s, programmed on computers by evolutionary biologists

who were explicitly seeking to model aspects of natural

evolution. It did not occur to any of them that this

strategy might be more generally applicable to artificial

problems, but that recognition was not long in coming:

"Evolutionary computation was definitely in the air in the

formative days of the electronic computer" (Mitchell 1996, p.2). By 1962,

researchers such as G.E.P. Box, G.J. Friedman, W.W. Bledsoe

and H.J. Bremermann had all independently developed

evolution-inspired algorithms for function optimization and

machine learning, but their work attracted little followup.

A more successful development in this area came in 1965,

when Ingo Rechenberg, then of the Technical University of

Berlin, introduced a technique he called evolution

strategy, though it was more similar to hill-climbers

than to genetic algorithms. In this technique, there was no

population or crossover; one parent was mutated to produce

one offspring, and the better of the two was kept and

became the parent for the next round of mutation (Haupt and Haupt 1998, p.146). Later

versions introduced the idea of a population. Evolution

strategies are still employed today by engineers and

scientists, especially in Germany.

The next important development in the field came in

1966, when L.J. Fogel, A.J. Owens and M.J. Walsh introduced

in America a technique they called evolutionary

programming. In this method, candidate solutions to

problems were represented as simple finite-state machines;

like Rechenberg's evolution strategy, their algorithm

worked by randomly mutating one of these simulated machines

and keeping the better of the two (Mitchell 1996, p.2; Goldberg 1989, p.105). Also like

evolution strategies, a broader formulation of the

evolutionary programming technique is still an area of

ongoing research today. However, what was still lacking in

both these methodologies was recognition of the importance

of crossover.

As early as 1962, John Holland's work on adaptive

systems laid the foundation for later developments; most

notably, Holland was also the first to explicitly propose

crossover and other recombination operators. However, the

seminal work in the field of genetic algorithms came in

1975, with the publication of the book Adaptation in

Natural and Artificial Systems. Building on earlier

research and papers both by Holland himself and by

colleagues at the University of Michigan, this book was the

first to systematically and rigorously present the concept

of adaptive digital systems using mutation, selection and

crossover, simulating processes of biological evolution, as

a problem-solving strategy. The book also attempted to put

genetic algorithms on a firm theoretical footing by

introducing the notion of schemata (Mitchell 1996, p.3; Haupt and Haupt 1998, p.147). That

same year, Kenneth De Jong's important dissertation

established the potential of GAs by showing that they could

perform well on a wide variety of test functions, including

noisy, discontinuous, and multimodal search landscapes (Goldberg 1989, p.107).

These foundational works established more widespread

interest in evolutionary computation. By the early to

mid-1980s, genetic algorithms were being applied to a broad

range of subjects, from abstract mathematical problems like

bin-packing and graph coloring to tangible engineering

issues such as pipeline flow control, pattern recognition

and classification, and structural optimization (Goldberg 1989, p. 128).

At first, these applications were mainly theoretical.

However, as research continued to proliferate, genetic

algorithms migrated into the commercial sector, their rise

fueled by the exponential growth of computing power and the

development of the Internet. Today, evolutionary

computation is a thriving field, and genetic algorithms are

"solving problems of everyday interest" (Haupt and Haupt 1998, p.147) in areas

of study as diverse as stock market prediction and

portfolio planning, aerospace engineering, microchip

design, biochemistry and molecular biology, and scheduling

at airports and assembly lines. The power of evolution has

touched virtually any field one cares to name, shaping the

world around us invisibly in countless ways, and new uses

continue to be discovered as research is ongoing. And at

the heart of it all lies nothing more than Charles Darwin's

simple, powerful insight: that the random chance of

variation, coupled with the law of selection, is a

problem-solving technique of immense power and nearly

unlimited application.

What are the strengths of GAs?

|

|

- The first and most important

point is that genetic algorithms are intrinsically

parallel. Most other algorithms are serial and can only

explore the solution space to a problem in one direction at

a time, and if the solution they discover turns out to be

suboptimal, there is nothing to do but abandon all work

previously completed and start over. However, since GAs

have multiple offspring, they can explore the solution

space in multiple directions at once. If one path turns out

to be a dead end, they can easily eliminate it and continue

work on more promising avenues, giving them a greater

chance each run of finding the optimal solution.

However, the advantage of parallelism goes beyond this.

Consider the following: All the 8-digit binary strings

(strings of 0's and 1's) form a search space, which can be

represented as ******** (where the * stands for "either 0

or 1"). The string 01101010 is one member of this space.

However, it is also a member of the space 0*******,

the space 01******, the space 0******0, the space 0*1*1*1*,

the space 01*01**0, and so on. By evaluating the fitness of

this one particular string, a genetic algorithm would be

sampling each of these many spaces to which it belongs.

Over many such evaluations, it would build up an

increasingly accurate value for the average fitness

of each of these spaces, each of which has many members.

Therefore, a GA that explicitly evaluates a small number of

individuals is implicitly evaluating a much larger group of

individuals - just as a pollster who asks questions of a

certain member of an ethnic, religious or social group

hopes to learn something about the opinions of all members

of that group, and therefore can reliably predict national

opinion while sampling only a small percentage of the

population. In the same way, the GA can "home in" on the

space with the highest-fitness individuals and find the

overall best one from that group. In the context of

evolutionary algorithms, this is known as the Schema

Theorem, and is the "central advantage" of a GA over other

problem-solving methods (Holland

1992, p. 68; Mitchell 1996,

p.28-29; Goldberg 1989,

p.20).

- Due to the parallelism that

allows them to implicitly evaluate many schema at once,

genetic algorithms are particularly well-suited to solving

problems where the space of all potential solutions is

truly huge - too vast to search exhaustively in any

reasonable amount of time. Most problems that fall into

this category are known as "nonlinear". In a linear

problem, the fitness of each component is independent, so

any improvement to any one part will result in an

improvement of the system as a whole. Needless to say, few

real-world problems are like this. Nonlinearity is the

norm, where changing one component may have ripple effects

on the entire system, and where multiple changes that

individually are detrimental may lead to much greater

improvements in fitness when combined. Nonlinearity results

in a combinatorial explosion: the space of 1,000-digit

binary strings can be exhaustively searched by evaluating

only 2,000 possibilities if the problem is linear, whereas

if it is nonlinear, an exhaustive search requires

evaluating 21000 possibilities -

a number that would take over 300 digits to write out in

full.

Fortunately, the implicit parallelism of a GA allows it to

surmount even this enormous number of possibilities,

successfully finding optimal or very good results in a

short period of time after directly sampling only small

regions of the vast fitness landscape (Forrest 1993, p. 877). For example,

a genetic algorithm developed jointly by engineers from

General Electric and Rensselaer Polytechnic Institute

produced a high-performance jet engine turbine design that

was three times better than a human-designed configuration

and 50% better than a configuration designed by an expert

system by successfully navigating a solution space

containing more than 10387

possibilities. Conventional methods for designing such

turbines are a central part of engineering projects that

can take up to five years and cost over $2 billion; the

genetic algorithm discovered this solution after two days

on a typical engineering desktop workstation (Holland 1992, p.72).

- Another notable strength of

genetic algorithms is that they perform well in problems

for which the fitness landscape is complex - ones where the

fitness function is discontinuous, noisy, changes over

time, or has many local optima. Most practical problems

have a vast solution space, impossible to search

exhaustively; the challenge then becomes how to avoid the

local optima - solutions that are better than all the

others that are similar to them, but that are not as good

as different ones elsewhere in the solution space. Many

search algorithms can become trapped by local optima: if

they reach the top of a hill on the fitness landscape, they

will discover that no better solutions exist nearby and

conclude that they have reached the best one, even though

higher peaks exist elsewhere on the map.

Evolutionary algorithms, on the other hand, have proven to

be effective at escaping local optima and discovering the

global optimum in even a very rugged and complex fitness

landscape. (It should be noted that, in reality, there is

usually no way to tell whether a given solution to a

problem is the one global optimum or just a very high local

optimum. However, even if a GA does not always deliver a

provably perfect solution to a problem, it can almost

always deliver at least a very good solution.) All four of

a GA's major components - parallelism, selection, mutation,

and crossover - work together to accomplish this. In the

beginning, the GA generates a diverse initial population,

casting a "net" over the fitness landscape. (Koza (2003, p. 506) compares this to an army

of parachutists dropping onto the landscape of a problem's

search space, with each one being given orders to find the

highest peak.) Small mutations enable each individual to

explore its immediate neighborhood, while selection focuses

progress, guiding the algorithm's offspring uphill to more

promising parts of the solution space (Holland 1992, p. 68).

However, crossover is the key element that distinguishes

genetic algorithms from other methods such as hill-climbers

and simulated annealing. Without crossover, each individual

solution is on its own, exploring the search space in its

immediate vicinity without reference to what other

individuals may have discovered. However, with crossover in

place, there is a transfer of information between

successful candidates - individuals can benefit from what

others have learned, and schemata can be mixed and

combined, with the potential to produce an offspring that

has the strengths of both its parents and the weaknesses of

neither. This point is illustrated in Koza et al. 1999, p.486, where the

authors discuss a problem of synthesizing a lowpass filter

using genetic programming. In one generation, two parent

circuits were selected to undergo crossover; one parent had

good topology (components such as inductors and capacitors

in the right places) but bad sizing (values of inductance

and capacitance for its components that were far too low).

The other parent had bad topology, but good sizing. The

result of mating the two through crossover was an offspring

with the good topology of one parent and the good sizing of

the other, resulting in a substantial improvement in

fitness over both its parents.

The problem of finding the global optimum in a space with

many local optima is also known as the dilemma of

exploration vs. exploitation, "a classic problem for all

systems that can adapt and learn" (Holland 1992, p. 69). Once an

algorithm (or a human designer) has found a problem-solving

strategy that seems to work satisfactorily, should it

concentrate on making the best use of that strategy, or

should it search for others? Abandoning a proven strategy

to look for new ones is almost guaranteed to involve losses

and degradation of performance, at least in the short term.

But if one sticks with a particular strategy to the

exclusion of all others, one runs the risk of not

discovering better strategies that exist but have not yet

been found. Again, genetic algorithms have shown themselves

to be very good at striking this balance and discovering

good solutions with a reasonable amount of time and

computational effort.

- Another area in which

genetic algorithms excel is their ability to manipulate

many parameters simultaneously (Forrest 1993, p. 874). Many

real-world problems cannot be stated in terms of a single

value to be minimized or maximized, but must be expressed

in terms of multiple objectives, usually with tradeoffs

involved: one can only be improved at the expense of

another. GAs are very good at solving such problems: in

particular, their use of parallelism enables them to

produce multiple equally good solutions to the same

problem, possibly with one candidate solution optimizing

one parameter and another candidate optimizing a different

one (Haupt and Haupt 1998, p.17),

and a human overseer can then select one of these

candidates to use. If a particular solution to a

multiobjective problem optimizes one parameter to a degree

such that that parameter cannot be further improved without

causing a corresponding decrease in the quality of some

other parameter, that solution is called Pareto

optimal or non-dominated (Coello 2000, p. 112).

- Finally, one of the

qualities of genetic algorithms which might at first appear

to be a liability turns out to be one of their strengths:

namely, GAs know nothing about the problems they are

deployed to solve. Instead of using previously known

domain-specific information to guide each step and making

changes with a specific eye towards improvement, as human

designers do, they are "blind watchmakers" (Dawkins 1996); they make

random changes to their candidate solutions and then

use the fitness function to determine whether those changes

produce an improvement.

The virtue of this technique is that it allows genetic

algorithms to start out with an open mind, so to speak.

Since its decisions are based on randomness, all

possible search pathways are theoretically open to a GA; by

contrast, any problem-solving strategy that relies on prior

knowledge must inevitably begin by ruling out many pathways

a priori, therefore missing any novel solutions that

may exist there (Koza et al. 1999,

p. 547). Lacking preconceptions based on established

beliefs of "how things should be done" or what "couldn't

possibly work", GAs do not have this problem. Similarly,

any technique that relies on prior knowledge will break

down when such knowledge is not available, but again, GAs

are not adversely affected by ignorance (Goldberg 1989, p. 23). Through

their components of parallelism, crossover and mutation,

they can range widely over the fitness landscape, exploring

regions which intelligently produced algorithms might have

overlooked, and potentially uncovering solutions of

startling and unexpected creativity that might never have

occurred to human designers. One vivid illustration of this

is the rediscovery, by genetic programming, of the concept

of negative feedback - a principle crucial to many

important electronic components today, but one that, when

it was first discovered, was denied a patent for nine years

because the concept was so contrary to established beliefs

(Koza et al. 2003, p. 413).

Evolutionary algorithms, of course, are neither aware nor

concerned whether a solution runs counter to established

beliefs - only whether it works.

What are the limitations of GAs?

|

|

Although genetic algorithms have proven to be an

efficient and powerful problem-solving strategy, they are

not a panacea. GAs do have certain limitations; however, it

will be shown that all of these can be overcome and none of

them bear on the validity of biological evolution.

- The first, and most

important, consideration in creating a genetic algorithm is

defining a representation for the problem. The language

used to specify candidate solutions must be robust; i.e.,

it must be able to tolerate random changes such that fatal

errors or nonsense do not consistently result.

There are two main ways of achieving this. The first, which

is used by most genetic algorithms, is to define

individuals as lists of numbers - binary-valued,

integer-valued, or real-valued - where each number

represents some aspect of a candidate solution. If the

individuals are binary strings, 0 or 1 could stand for the

absence or presence of a given feature. If they are lists

of numbers, these numbers could represent many different

things: the weights of the links in a neural network, the

order of the cities visited in a given tour, the spatial

placement of electronic components, the values fed into a

controller, the torsion angles of peptide bonds in a

protein, and so on. Mutation then entails changing these

numbers, flipping bits or adding or subtracting random

values. In this case, the actual program code does not

change; the code is what manages the simulation and keeps

track of the individuals, evaluating their fitness and

perhaps ensuring that only values realistic and possible

for the given problem result.

In another method, genetic programming, the actual program

code does change. As discussed in the section Methods of representation,

GP represents individuals as executable trees of code that

can be mutated by changing or swapping subtrees. Both of

these methods produce representations that are robust

against mutation and can represent many different kinds of

problems, and as discussed in the section Some specific examples, both have had

considerable success.

This issue of representing candidate solutions in a robust

way does not arise in nature, because the method of

representation used by evolution, namely the genetic code,

is inherently robust: with only a very few exceptions, such

as a string of stop codons, there is no such thing as a

sequence of DNA bases that cannot be translated into a

protein. Therefore, virtually any change to an individual's

genes will still produce an intelligible result, and so

mutations in evolution have a higher chance of producing an

improvement. This is in contrast to human-created languages

such as English, where the number of meaningful words is

small compared to the total number of ways one can combine

letters of the alphabet, and therefore random changes to an

English sentence are likely to produce nonsense.

- The problem of how to write

the fitness function must be carefully considered so that

higher fitness is attainable and actually does equate to a

better solution for the given problem. If the fitness

function is chosen poorly or defined imprecisely, the

genetic algorithm may be unable to find a solution to the

problem, or may end up solving the wrong problem. (This

latter situation is sometimes described as the tendency of

a GA to "cheat", although in reality all that is happening

is that the GA is doing what it was told to do, not what

its creators intended it to do.) An example of this can be

found in Graham-Rowe 2002, in

which researchers used an evolutionary algorithm in

conjunction with a reprogrammable hardware array, setting

up the fitness function to reward the evolving circuit for

outputting an oscillating signal. At the end of the

experiment, an oscillating signal was indeed being produced

- but instead of the circuit itself acting as an

oscillator, as the researchers had intended, they

discovered that it had become a radio receiver that was

picking up and relaying an oscillating signal from a nearby

piece of electronic equipment!

This is not a problem in nature, however. In the laboratory

of biological evolution there is only one fitness function,

which is the same for all living things - the drive to

survive and reproduce, no matter what adaptations make this

possible. Those organisms which reproduce more abundantly

compared to their competitors are more fit; those which

fail to reproduce are unfit.

- In addition to making a

good choice of fitness function, the other parameters of a

GA - the size of the population, the rate of mutation and

crossover, the type and strength of selection - must be

also chosen with care. If the population size is too small,

the genetic algorithm may not explore enough of the

solution space to consistently find good solutions. If the

rate of genetic change is too high or the selection scheme

is chosen poorly, beneficial schema may be disrupted and

the population may enter error catastrophe, changing too

fast for selection to ever bring about convergence.

Living things do face similar difficulties, and

evolution has dealt with them. It is true that if a

population size falls too low, mutation rates are too high,

or the selection pressure is too strong (such a situation

might be caused by drastic environmental change), then the

species may go extinct. The solution has been "the

evolution of evolvability" - adaptations that alter a

species' ability to adapt. For example, most living things

have evolved elaborate molecular machinery that checks for

and corrects errors during the process of DNA replication,

keeping their mutation rate down to acceptably low levels;

conversely, in times of severe environmental stress, some

bacterial species enter a state of hypermutation

where the rate of DNA replication errors rises sharply,

increasing the chance that a compensating mutation will be

discovered. Of course, not all catastrophes can be evaded,

but the enormous diversity and highly complex adaptations

of living things today show that, in general, evolution is

a successful strategy. Likewise, the diverse applications

of and impressive results produced by genetic algorithms

show them to be a powerful and worthwhile field of

study.

- One type of problem that

genetic algorithms have difficulty dealing with are

problems with "deceptive" fitness functions (Mitchell 1996, p.125), those where

the locations of improved points give misleading

information about where the global optimum is likely to be

found. For example, imagine a problem where the search

space consisted of all eight-character binary strings, and

the fitness of an individual was directly proportional to

the number of 1s in it - i.e., 00000001 would be less fit

than 00000011, which would be less fit than 00000111, and

so on - with two exceptions: the string 11111111 turned out

to have very low fitness, and the string 00000000 turned

out to have very high fitness. In such a problem, a GA (as

well as most other algorithms) would be no more likely to

find the global optimum than random search.

The resolution to this problem is the same for both genetic

algorithms and biological evolution: evolution is not a

process that has to find the single global optimum every

time. It can do almost as well by reaching the top of a

high local optimum, and for most situations, this will

suffice, even if the global optimum cannot easily be

reached from that point. Evolution is very much a

"satisficer" - an algorithm that delivers a "good enough"

solution, though not necessarily the best possible

solution, given a reasonable amount of time and effort

invested in the search. The Evidence for Jury-Rigged

Design in Nature FAQ gives examples of this very

outcome appearing in nature. (It is also worth noting that

few, if any, real-world problems are as fully deceptive as

the somewhat contrived example given above. Usually, the

location of local improvements gives at least some

information about the location of the global

optimum.)

- One well-known problem

that can occur with a GA is known as premature

convergence. If an individual that is more fit than

most of its competitors emerges early on in the course of

the run, it may reproduce so abundantly that it drives down

the population's diversity too soon, leading the algorithm

to converge on the local optimum that that individual

represents rather than searching the fitness landscape

thoroughly enough to find the global optimum (Forrest 1993, p. 876; Mitchell 1996, p. 167). This is an

especially common problem in small populations, where even

chance variations in reproduction rate may cause one

genotype to become dominant over others.

The most common methods implemented by GA researchers to

deal with this problem all involve controlling the strength

of selection, so as not to give excessively fit individuals

too great of an advantage. Rank,

scaling and tournament selection, discussed earlier,

are three major means for accomplishing this; some methods

of scaling selection include sigma scaling, in which

reproduction is based on a statistical comparison to the

population's average fitness, and Boltzmann selection, in

which the strength of selection increases over the course

of a run in a manner similar to the "temperature" variable

in simulated annealing (Mitchell

1996, p. 168).

Premature convergence does occur in nature (where it

is called genetic

drift by biologists). This should not be surprising; as

discussed above, evolution as a problem-solving strategy is

under no obligation to find the single best solution,

merely one that is good enough. However, premature

convergence in nature is less common since most beneficial

mutations in living things produce only small, incremental

fitness improvements; mutations that produce such a large

fitness gain as to give their possessors dramatic

reproductive advantage are rare.

- Finally, several

researchers (Holland 1992, p.72;

Forrest 1993, p.875; Haupt and Haupt 1998, p.18) advise

against using genetic algorithms on analytically solvable

problems. It is not that genetic algorithms cannot find

good solutions to such problems; it is merely that

traditional analytic methods take much less time and

computational effort than GAs and, unlike GAs, are usually

mathematically guaranteed to deliver the one exact

solution. Of course, since there is no such thing as a

mathematically perfect solution to any problem of

biological adaptation, this issue does not arise in

nature.

Some specific examples of GAs

|

|

As the power of evolution gains increasingly widespread

recognition, genetic algorithms have been used to tackle a

broad variety of problems in an extremely diverse array of

fields, clearly showing their power and their potential.

This section will discuss some of the more noteworthy uses

to which they have been put.

- Acoustics

Sato et al. 2002 used genetic

algorithms to design a concert hall with optimal acoustic

properties, maximizing the sound quality for the audience,

for the conductor, and for the musicians on stage. This

task involves the simultaneous optimization of multiple

variables. Beginning with a shoebox-shaped hall, the

authors' GA produced two non-dominated solutions, both of

which were described as "leaf-shaped" (p.526). The authors

state that these solutions have proportions similar to

Vienna's Grosser Musikvereinsaal, which is widely agreed to

be one of the best - if not the best - concert hall in the

world in terms of acoustic properties.

Porto, Fogel and Fogel 1995 used

evolutionary programming to train neural networks to

distinguish between sonar reflections from different types

of objects: man-made metal spheres, sea mounts, fish and

plant life, and random background noise. After 500

generations, the best evolved neural network had a

probability of correct classification ranging between 94%

and 98% and a probability of misclassification between 7.4%

and 1.5%, which are "reasonable probabilities of detection

and false alarm" (p.21). The evolved network matched the

performance of another network developed by simulated

annealing and consistently outperformed networks trained by

back propagation, which "repeatedly stalled at suboptimal

weight sets that did not yield satisfactory results"

(p.21). By contrast, both stochastic methods showed

themselves able to overcome these local optima and produce

smaller, effective and more robust networks; but the

authors suggest that the evolutionary algorithm, unlike

simulated annealing, operates on a population and so takes

advantage of global information about the search space,

potentially leading to better performance in the long

run.

Tang et al. 1996 survey the uses of

genetic algorithms within the field of acoustics and signal

processing. One area of particular interest involves the

use of GAs to design Active Noise Control (ANC) systems,

which cancel out undesired sound by producing sound waves

that destructively interfere with the unwanted noise. This

is a multiple-objective problem requiring the precise

placement and control of multiple loudspeakers; GAs have

been used both to design the controllers and find the

optimal placement of the loudspeakers for such systems,

resulting in the "effective attenuation of noise" (p.33) in

experimental tests.

- Aerospace

engineering

Obayashi et al. 2000 used a

multiple-objective genetic algorithm to design the wing

shape for a supersonic aircraft. Three major considerations

govern the wing's configuration - minimizing aerodynamic

drag at supersonic cruising speeds, minimizing drag at

subsonic speeds, and minimizing aerodynamic load (the

bending force on the wing). These objectives are mutually

exclusive, and optimizing them all simultaneously requires

tradeoffs to be made.

The chromosome in this problem is a string of 66

real-valued numbers, each of which corresponds to a

specific aspect of the wing: its shape, its thickness, its

twist, and so on. Evolution with elitist rank selection was

simulated for 70 generations, with a population size of 64

individuals. At the termination of this process, there were

several Pareto-optimal individuals, each one representing a

single non-dominated solution to the problem. The paper

notes that these best-of-run individuals have "physically

reasonable" characteristics, indicating the validity of the

optimization technique (p.186). To further evaluate the

quality of the solutions, six of the best were compared to

a supersonic wing design produced by the SST Design Team of

Japan's National Aerospace Laboratory. All six were

competitive, having drag and load values approximately

equal to or less than the human-designed wing; one of the

evolved solutions in particular outperformed the NAL's

design in all three objectives. The authors note that the

GA's solutions are similar to a design called the "arrow

wing" which was first suggested in the late 1950s, but

ultimately abandoned in favor of the more conventional

delta-wing design.

In a follow-up paper (Sasaki et al.

2001), the authors repeat their experiment while adding

a fourth objective, namely minimizing the twisting

moment of the wing (a known potential problem for

arrow-wing SST designs). Additional control points for

thickness are also added to the array of design variables.

After 75 generations of evolution, two of the best

Pareto-optimal solutions were again compared to the

Japanese National Aerospace Laboratory's wing design for

the NEXST-1 experimental supersonic airplane. It was found

that both of these designs (as well as one optimal design

from the previous simulation, discussed above) were

physically reasonable and superior to the NAL's design in

all four objectives.

Williams, Crossley and Lang

2001 applied genetic algorithms to the task of spacing

satellite orbits to minimize coverage blackouts. As

telecommunications technology continues to improve, humans

are increasingly dependent on Earth-orbiting satellites to

perform many vital functions, and one of the problems

engineers face is designing their orbital trajectories.

Satellites in high Earth orbit, around 22,000 miles up, can

see large sections of the planet at once and be in constant

contact with ground stations, but these are far more

expensive to launch and more vulnerable to cosmic

radiation. It is more economical to put satellites in low

orbits, as low as a few hundred miles in some cases, but

because of the curvature of the Earth it is inevitable that

these satellites will at times lose line-of-sight access to

surface receivers and thus be useless. Even constellations

of several satellites experience unavoidable blackouts and

losses of coverage for this reason. The challenge is to

arrange the satellites' orbits to minimize this downtime.

This is a multi-objective problem, involving the

minimization of both the average blackout time for all

locations and the maximum blackout time for any one

location; in practice, these goals turn out to be mutually

exclusive.

When the GA was applied to this problem, the evolved

results for three, four and five-satellite constellations

were unusual, highly asymmetric orbit configurations, with

the satellites spaced by alternating large and small gaps

rather than equal-sized gaps as conventional techniques

would produce. However, this solution significantly reduced

both average and maximum revisit times, in some cases by up

to 90 minutes. In a news article about the results, Dr.

William Crossley noted that "engineers with years of

aerospace experience were surprised by the higher

performance offered by the unconventional design".

| Keane and Brown 1996 used a GA

to evolve a new design for a load-bearing truss or boom

that could be assembled in orbit and used for satellites,

space stations and other aerospace construction projects.

The result, a twisted, organic-looking structure that has

been compared to a human leg bone, uses no more material

than the standard truss design but is lightweight, strong

and far superior at damping out damaging vibrations, as was

confirmed by real-world tests of the final product. And yet

"No intelligence made the designs. They just evolved" (Petit 1998). The authors of the paper

further note that their GA only ran for 10 generations due

to the computationally intensive nature of the simulation,

and the population had not become stagnant. Continuing the

run for more generations would undoubtedly have produced

further improvements in performance. |

Figure 4: A genetically optimized three-dimensional

truss with improved frequency response. (Adapted from [1].) |

Finally, as reported in Gibbs

1996, Lockheed Martin has used a genetic algorithm to

evolve a series of maneuvers to shift a spacecraft from one

orientation to another within 2% of the theoretical minimum

time for such maneuvers. The evolved solution was 10%

faster than a solution hand-crafted by an expert for the

same problem.

- Astronomy and

astrophysics

Charbonneau 1995 suggests

the usefulness of GAs for problems in astrophysics by

applying them to three example problems: fitting the

rotation curve of a galaxy based on observed rotational

velocities of its components, determining the pulsation

period of a variable star based on time-series data, and

solving for the critical parameters in a

magnetohydrodynamic model of the solar wind. All three of

these are hard multi-dimensional nonlinear problems.

Charbonneau's genetic algorithm, PIKAIA, uses generational,

fitness-proportionate ranking selection coupled with

elitism, ensuring that the single best individual is copied

over once into the next generation without modification.

PIKAIA has a crossover rate of 0.65 and a variable mutation

rate that is set to 0.003 initially and gradually increases

later on, as the population approaches convergence, to

maintain variability in the gene pool.

In the galactic rotation-curve problem, the GA produced two

curves, both of which were very good fits to the data (a

common result in this type of problem, in which there is

little contrast between neighboring hilltops); further

observations can then distinguish which one is to be

preferred. In the time-series problem, the GA was

impressively successful in autonomously generating a

high-quality fit for the data, but harder problems were not

fitted as well (although, Charbonneau points out, these

problems are equally difficult to solve with conventional

techniques). The paper suggests that a hybrid GA employing

both artificial evolution and standard analytic techniques

might perform better. Finally, in solving for the six

critical parameters of the solar wind, the GA successfully

determined the value of three of them to an accuracy of

within 0.1% and the remaining three to accuracies of within

1 to 10%. (Though lower experimental error for these three

would always be preferable, Charbonneau notes that there

are no other robust, efficient methods for experimentally

solving a six-dimensional nonlinear problem of this type; a

conjugate gradient method works "as long as a very

good starting guess can be provided" (p.323). By contrast,

GAs do not require such finely tuned domain-specific

knowledge.)

Based on the results obtained so far, Charbonneau suggests

that GAs can and should find use in other difficult

problems in astrophysics, in particular inverse problems

such as Doppler imaging and helioseismic inversions. In

closing, Charbonneau argues that GAs are a "strong and

promising contender" (p.324) in this field, one that can be

expected to complement rather than replace traditional

optimization techniques, and concludes that "the bottom

line, if there is to be one, is that genetic algorithms

work, and often frightfully well" (p.325).

- Chemistry

High-powered, ultrashort pulses of laser energy can split

apart complex molecules into simpler molecules, a process

with important applications to organic chemistry and

microelectronics. The specific end products of such a

reaction can be controlled by modulating the phase of the

laser pulse. However, for large molecules, solving for the

desired pulse shape analytically is too difficult: the

calculations are too complex and the relevant

characteristics (the potential energy surfaces of the

molecules) are not known precisely enough.

Assion et al. 1998 solved this

problem by using an evolutionary algorithm to design the

pulse shape. Instead of inputting complex, problem-specific

knowledge about the quantum characteristics of the input

molecules to design the pulse to specifications, the EA

fires a pulse, measures the proportions of the resulting

product molecules, randomly mutates the beam

characteristics with the hope of getting these proportions

closer to the desired output, and the process repeats.

(Rather than fine-tune any characteristics of the laser

beam directly, the authors' GA represents individuals as a

set of 128 numbers, each of which is a voltage value that

controls the refractive index of one of the pixels in the

laser light modulator. Again, no problem-specific knowledge

about the properties of either the laser or the reaction

products is needed.) The authors state that their

algorithm, when applied to two sample substances,

"automatically finds the best configuration... no matter

how complicated the molecular response may be" (p.920),

demonstrating "automated coherent control on products that

are chemically different from each other and from the

parent molecule" (p.921).

In the early to mid-1990s, the widespread adoption of a

novel drug design technique called combinatorial

chemistry revolutionized the pharmaceutical industry.

In this method, rather than the painstaking, precise

synthesis of a single compound at a time, biochemists

deliberately mix a wide variety of reactants to produce an

even wider variety of products - hundreds, thousands or

millions of different compounds per batch - which can then

be rapidly screened for biochemical activity. In designing

libraries of reactants for this technique, there are two

main approaches: reactant-based design, which chooses

optimized groups of reactants without considering what

products will result, and product-based design, which

selects reactants most likely to produce products with the

desired properties. Product-based design is more difficult

and complex, but has been shown to result in better and

more diverse combinatorial libraries and a greater

likelihood of getting a usable result.

In a paper funded by GlaxoSmithKline's research and

development department, Gillet

2002 discusses the use of a multiobjective genetic

algorithm for the product-based design of combinatorial

libraries. In choosing the compounds that go into a

particular library, qualities such as molecular diversity

and weight, cost of supplies, toxicity, absorption,

distribution, and metabolism must all be considered. If the

aim is to find molecules similar to an existing molecule of

known function (a common method of new drug design),

structural similarity can also be taken into account. This

paper presents a multiobjective approach where a set of

Pareto-optimal results that maximize or minimize each of

these objectives can be developed. The author concludes

that the GA was able to simultaneously satisfy the criteria

of molecular diversity and maximum synthetic efficiency,

and was able to find molecules that were drug-like as well

as "very similar to given target molecules after exploring

a very small fraction of the total search space"

(p.378).

In a related paper, Glen and Payne

1995 discuss the use of genetic algorithms to

automatically design new molecules from scratch to fit a

given set of specifications. Given an initial population

either generated randomly or using the simple molecule

ethane as a seed, the GA randomly adds, removes and alters

atoms and molecular fragments with the aim of generating

molecules that fit the given constraints. The GA can

simultaneously optimize a large number of objectives,

including molecular weight, molecular volume, number of

bonds, number of chiral centers, number of atoms, number of

rotatable bonds, polarizability, dipole moment, and more in

order to produce candidate molecules with the desired

properties. Based on experimental tests, including one

difficult optimization problem that involved generating

molecules with properties similar to ribose (a sugar

compound frequently mimicked in antiviral drugs), the

authors conclude that the GA is an "excellent idea

generator" (p.199) that offers "fast and powerful

optimisation properties" and can generate "a diverse set of

possible structures" (p.182). They go on to state, "Of

particular note is the powerful optimising ability of the

genetic algorithm, even with relatively small population

sizes" (p.200). In a sign that these results are not merely

theoretical, Lemley 2001 reports

that the Unilever corporation has used genetic algorithms

to design new antimicrobial compounds for use in cleansers,

which it has patented.

- Electrical

engineering

A field-programmable gate array, or FPGA for short, is a

special type of circuit board with an array of logic cells,

each of which can act as any type of logic gate, connected

by flexible interlinks which can connect cells. Both of

these functions are controlled by software, so merely by

loading a special program into the board, it can be altered

on the fly to perform the functions of any one of a vast

variety of hardware devices.

Dr. Adrian Thompson has exploited this device, in

conjunction with the principles of evolution, to produce a

prototype voice-recognition circuit that can distinguish

between and respond to spoken commands using only 37 logic

gates - a task that would have been considered impossible

for any human engineer. He generated random bit strings of

0s and 1s and used them as configurations for the FPGA,

selecting the fittest individuals from each generation,

reproducing and randomly mutating them, swapping sections

of their code and passing them on to another round of

selection. His goal was to evolve a device that could at

first discriminate between tones of different frequencies

(1 and 10 kilohertz), then distinguish between the spoken

words "go" and "stop".

This aim was achieved within 3000 generations, but the

success was even greater than had been anticipated. The

evolved system uses far fewer cells than anything a human

engineer could have designed, and it does not even need the

most critical component of human-built systems - a clock.

How does it work? Thompson has no idea, though he has

traced the input signal through a complex arrangement of

feedback loops within the evolved circuit. In fact, out of

the 37 logic gates the final product uses, five of them are

not even connected to the rest of the circuit in any way -

yet if their power supply is removed, the circuit stops

working. It seems that evolution has exploited some subtle

electromagnetic effect of these cells to come up with its

solution, yet the exact workings of the complex and

intricate evolved structure remain a mystery (Davidson 1997).

Altshuler and Linden 1997 used

a genetic algorithm to evolve wire antennas with

pre-specified properties. The authors note that the design

of such antennas is an imprecise process, starting with the

desired properties and then determining the antenna's shape

through "guesses.... intuition, experience, approximate

equations or empirical studies" (p.50). This technique is

time-consuming, often does not produce optimal results, and

tends to work well only for relatively simple, symmetric

designs. By contrast, in the genetic algorithm approach,

the engineer specifies the antenna's electromagnetic

properties, and the GA automatically synthesizes a matching

configuration.

Figure 5: A crooked-wire genetic antenna

(after Altshuler and Linden

1997, figure 1). |

Altshuler and Linden used their GA to

design a circularly polarized seven-segment antenna with

hemispherical coverage; the result is shown to the left.

Each individual in the GA consisted of a binary chromosome

specifying the three-dimensional coordinates of each end of

each wire. Fitness was evaluated by simulating each

candidate according to an electromagnetic wiring code, and

the best-of-run individual was then built and tested. The

authors describe the shape of this antenna, which does not

resemble traditional antennas and has no obvious symmetry,

as "unusually weird" and "counter-intuitive" (p.52), yet it

had a nearly uniform radiation pattern with high bandwidth

both in simulation and in experimental testing, excellently

matching the prior specification. The authors conclude that

a genetic algorithm-based method for antenna design shows

"remarkable promise". "...this new design procedure is

capable of finding genetic antennas able to effectively

solve difficult antenna problems, and it will be

particularly useful in situations where existing designs

are not adequate" (p.52). |

- Financial

markets

Mahfoud and Mani 1996 used a

genetic algorithm to predict the future performance of 1600

publicly traded stocks. Specifically, the GA was tasked

with forecasting the relative return of each stock, defined

as that stock's return minus the average return of all 1600

stocks over the time period in question, 12 weeks (one

calendar quarter) into the future. As input, the GA was

given historical data about each stock in the form of a

list of 15 attributes, such as price-to-earnings ratio and

growth rate, measured at various past points in time; the

GA was asked to evolve a set of if/then rules to classify

each stock and to provide, as output, both a recommendation

on what to do with regards to that stock (buy, sell, or no

prediction) and a numerical forecast of the relative

return. The GA's results were compared to those of an

established neural net-based system which the authors had

been using to forecast stock prices and manage portfolios

for three years previously. Of course, the stock market is

an extremely noisy and nonlinear system, and no predictive

mechanism can be correct 100% of the time; the challenge is

to find a predictor that is accurate more often than

not.

In the experiment, the GA and the neural net each made

forecasts at the end of the week for each one of the 1600

stocks, for twelve consecutive weeks. Twelve weeks after

each prediction, the actual performance was compared with

the predicted relative return. Overall, the GA

significantly outperformed the neural network: in one trial

run, the GA correctly predicted the direction of one stock

47.6% of the time, made no prediction 45.8% of the time,

and made an incorrect prediction only 6.6% of the time, for

an overall predictive accuracy of 87.8%. Although the

neural network made definite predictions more often, it was

also wrong in its predictions more often (in fact, the

authors speculate that the GA's greater ability to make no

prediction when the data were uncertain was a factor in its

success; the neural net always produces a prediction unless

explicitly restricted by the programmer). In the 1600-stock

experiment, the GA produced a relative return of +5.47%,

versus +4.40% for the neural net - a statistically

significant difference. In fact, the GA also significantly

outperformed three major stock market indices - the S&P

500, the S&P 400, and the Russell 2000 - over this

period; chance was excluded as the cause of this result at

the 95% confidence level. The authors attribute this

compelling success to the ability of the genetic algorithm

to learn nonlinear relationships not readily apparent to

human observers, as well as the fact that it lacks a human

expert's "a priori bias against counterintuitive or

contrarian rules" (p.562).

Similar success was achieved by Andreou, Georgopoulos and Likothanassis

2002, who used hybrid genetic algorithms to evolve

neural networks that predicted the exchange rates of

foreign currencies up to one month ahead. As opposed to the

last example, where GAs and neural nets were in

competition, here the two worked in concert, with the GA

evolving the architecture (number of input units, number of

hidden units, and the arrangement of the links between

them) of the network which was then trained by a filter

algorithm.

As historical information, the algorithm was given 1300

previous raw daily values of five currencies - the American

dollar, the German deutsche mark, the French franc, the